Gridjam Slide show

Gridjam is a real-time, geographically distributed, networked multimedia event. It is an experimental project that brings together a visual artist, a composer, musicians and computer scientists, using the new high-speed international LambdaRail network. Gridjam will demonstrate real-time, low-latency, interactive distance computing through the complexity of a live, partly improvised, 3D-visualized musical performance, being both a world-class work of art and a research project into high-performance collaborative network computing.

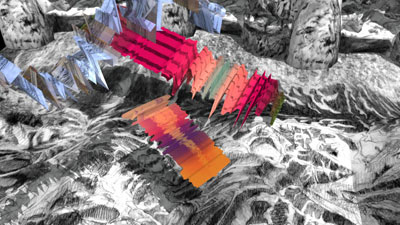

Gridjam will utilize Jack Ox and David Britton’s Virtual Color Organ™, visualizing Alvin Curran’s music performed by musicians located in four distant locales but connected via next-generation networking technologies. The Virtual Color Organ™ (VCO) is a 3D immersive environment in which music is visually realized in colored and image-textured shapes as it is heard. The visualization remains as a 3D graphical sculpture after the performance. The colors, images, shapes and even the motions and placement of the visualized musical shapes are governed by artist-defined metaphoric relationships, created by hand as aesthetic and symbolic qualities rather than algorithmically. The VCO visually illustrates the information contained in the music’s score, the composer’s instructions to the musicians, and the musicians’ contributions to the score as they improvise in reaction to each other’s performances and to the immersive visual experience. Illustrative of synesthesia and intermedia, the VCO displays the emergent properties within the meaning of music, both as information and as art.

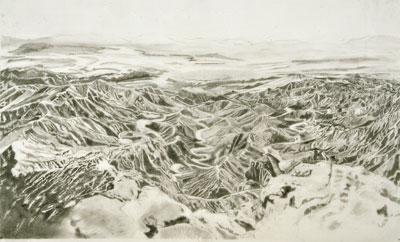

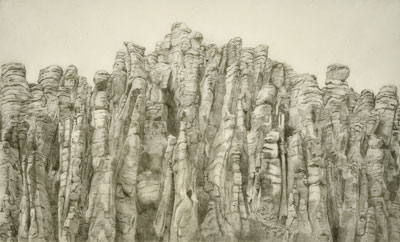

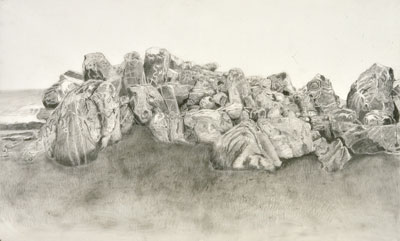

Ox created the original drawings of deserts in California and Arizona from which the Desert Organ Stop was modeled, commissioned Richard Rodriguez to model the landscape, and created the hand-drawn texture maps appearing in the virtual reality world. The VCO is capable of having multiple visual organ stops. An organ stop on a traditional organ is a voice that affects the entire keyboard of notes. An organ stop in the VCO is the 3D immersive environment in which the visualized music will exist and also the visual vocabulary applied to the musical objects.

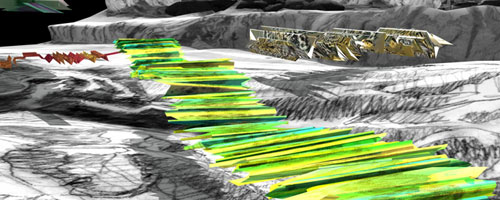

The 21st C. Virtual Color Organ™

The VCO is a computational system for translating musical compositions into visual performance. This interactive instrument consists of three basic parts:

1. A set of systems or syntax that provides transformations from a musical vocabulary to a visual one.
2. A 3D visual environment that serves as a performance space, together with the visual vocabulary from which the 3D environment was modeled. This visual vocabulary consists of landscape and/or architectural images and provides the objects on which the syntax acts.
3. A software environment that serves as the engine of interaction for the first two parts.

GridJam will take place among the black-and-white, hand-drawn desert sand and rock structures derived from real deserts in California and Arizona. The original drawings by Ox, from which the 3D models were made, serve as the basic texture maps for all of the musical objects.

GridJam: The Visualization

The original building blocks of GridJam come from a list of almost 200 collected sounds belonging to Curran. The sound files include John Cage reciting short phrases, Maria Callas singing a high note, animal sounds, objects such as coins being tossed, and various musical sounds. Ox put them through a Max program that created graphs of melody and dynamics. She sorted them into eight groups based on these simple visualizations and made 3D models that show melody from the front and dynamics on the top of the object. There are eight groups because there are eight landscape texture maps. Every object in a group uses the same hand-drawn landscape picture as the base of its texture map.

The colors and materials are based on the timbre of each specific sound sample. These colors can be rather complicated gradients, often in layers. If the sound sample has a sequence of vowel sounds, the colors in the gradient will show them according to Ox’s color system, which is based on how and where vowels are made in the vocal tract. Other timbre colors come from the extensive list of timbres created by Ox.

The 3D objects made by Ox from Curran’s collected sound files can appear in small portions of the whole, depending on the duration of the played sound. If the Disklavier key remains depressed for longer than the actual sound file, the object will begin to appear a second time, moving along the time line. The time line follows a path from the center of the virtual desert outward, until it curves up and back towards the center. Before reaching the center it curves up again and moves outward once more, repeating this pattern as many times as necessary to accommodate the playing. Because of this path, time moves both horizontally and vertically.

At the end of the performance the visualization will remain and can be inspected more thoroughly as a place with moving objects in a black-and-white desert environment. We will also have recorded the entire multimedia experience so that it can be played back in digital environments, such as the ever more ubiquitous digital planetarium theaters.
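The partial appearance of objects and the back-and-forth time line described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the VCO's actual software: the names `Appearance`, `appearances` and `timeline_position` are hypothetical, the object is assumed to be revealed linearly with playback time, and the time line is simplified to straight sweeps between center and rim that step up by a fixed amount at each turn (the text says the path turns before reaching the center and does not specify the curve shape).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Appearance:
    """One (possibly partial) appearance of a sound object on the time line."""
    start: float     # seconds since the key was pressed
    fraction: float  # portion of the 3D object revealed, 0.0 to 1.0


def appearances(hold_seconds: float, sample_seconds: float) -> list[Appearance]:
    """Split a key hold into appearances of the object.

    Assumption: the object is revealed linearly with playback time and
    starts to appear again each time the sound file's length is exceeded.
    """
    if sample_seconds <= 0:
        raise ValueError("sample_seconds must be positive")
    result = []
    start = 0.0
    while start < hold_seconds:
        played = min(sample_seconds, hold_seconds - start)
        result.append(Appearance(start, played / sample_seconds))
        start += sample_seconds
    return result


def timeline_position(t: float, sweep_seconds: float, rise: float) -> tuple[float, float]:
    """Return (radius, height) on the time line at time t.

    Assumption: radius is normalized to 0..1 (center to rim), every outward
    or inward sweep lasts sweep_seconds, and the path climbs by `rise` at
    each turn. This is a simplification of the curved path in the text.
    """
    if sweep_seconds <= 0:
        raise ValueError("sweep_seconds must be positive")
    sweeps, remainder = divmod(t, sweep_seconds)
    phase = remainder / sweep_seconds
    # Even-numbered sweeps move outward, odd-numbered sweeps move back in.
    radius = phase if int(sweeps) % 2 == 0 else 1.0 - phase
    return radius, int(sweeps) * rise
```

For instance, holding a key for 5 seconds on a 2-second sample would give two full appearances followed by a half appearance under these assumptions, and the height returned by `timeline_position` shows how time moves vertically as well as horizontally.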

The Music: by Alvin Curran

Curran has come up with a plan for the musical part of this project which is inspired both by the pure, fundamental "synesthetic" goals of Ox’s visual structures and by the technical and theatrical nature of the domed projection spaces, the local acoustics and the "global" nature of the work itself. The music will be composed using a fluid mix of structured indeterminacy, synchronized composition, spontaneous music structures, and live electronic processing. But the essence of the music, whose goal is to become interchangeable with the images, is to create another set of "images", real and sonic, which is the music-theater itself. The L-Rail communications will enable the complete synchronization of the dislocated music groups and their ability to react as if in the same room together. The choice of instruments will become another prime visual element embedded in the rich texts of Jack’s desert and VR sound-objects.

The music shall be performed by the Del Sol String Quartet, Anthony Braxton on the saxophone, and Curran on the Disklavier (playing sounds which have been modeled in Maya by Ox over the last four years). These sounds will be subjected to processing device filters (by Tom Erbe/SoundHack), e.g. granulators. The 3D objects will go into perpetual movement patterns that metaphorically represent the filters’ processing patterns. The music (gestures and typologies) itself will range from almost inaudible sparse noises to discrete isolated tones to pulsing synchronous rhythms to clouds and walls of sound. Characterizing the music throughout will be the presence of recorded (natural) sounds from animals, people, machines and phenomena from around the world (which, however embedded in the texture, are at the source of the visual materials generated). MIDI information from the processing devices will also serve as real-time transformers of some aspects of the visual objects.

Institutional Participants with Deep Vision Venues

Gridjam, in partnership with the ARTSLab, which is physically connected to the High Performance Computing Center at the University of New Mexico, is in a position to forge the path to these visionary new technologies. We are also partnering with Calit2 at the University of California, San Diego, and with the University of Alberta. Presently, we are still deciding on other performance and/or audience venues. Recently the Netherlands, through SARA and de Waag Society, has come on board with a venue at the "pakhuis de Zwijger".

Because the technology is so new, the situation is different in every venue. At Calit2 we have the choice of a six-sided CAVE or a large SAGE wall with audience seating. At UNM we have two dome theaters, powered by Sky-Skan. The 15’ dome is a research and development facility located in the ARTSLab itself, and the 55’ dome theater, called the Lodestar, belongs to UNM and is located in the Museum of Natural History. The University of Alberta has a full-dome theater, and de Waag Society in Amsterdam is just completing an auditorium with a SAGE wall similar to that at Calit2. Surely Gridjam will garner much attention as an early demonstration of the magic of the new LambdaRail.

TECHNICAL STRUCTURE
